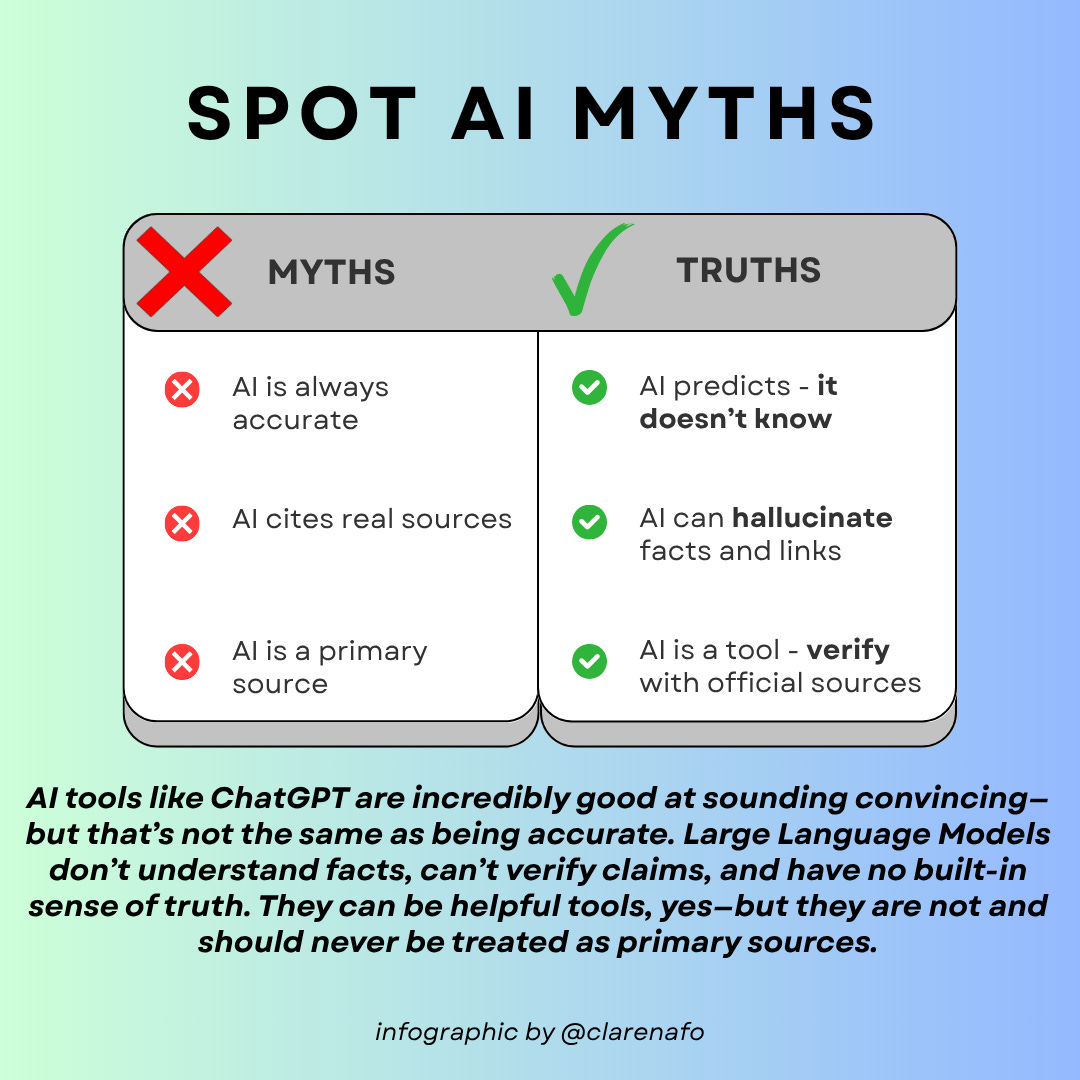

Why AI tools like ChatGPT and Grok should never be treated as primary sources

A fact-checker’s guide to why you shouldn’t trust AI blindly.

As a NAFO member and Twitter (I refuse to call it X!) Community Notes contributor with over 200 published Community Notes, I spend a lot of time fighting misinformation and disinformation. I also have professional expertise with generative AI tools. Lately, I’ve noticed a worrying trend: people using generative AI tools like Grok and ChatGPT as if they were primary sources of information.

They’re not. A primary source is a firsthand account: something original, official, or directly observed. AI tools are none of these things.

These tools are incredibly good at sounding convincing—but that’s not the same as being accurate. Large Language Models (LLMs) like ChatGPT don’t understand facts, can’t verify claims, and have no built-in sense of truth. They can be helpful tools, yes—but they are not and should never be treated as primary sources.

What are Large Language Models?

Picture a super-smart autocomplete engine that’s scoured the internet. That’s what Large Language Models (LLMs) like ChatGPT and Grok are. They’re AI systems trained on vast datasets—books, websites, social media, and more—to predict and generate human-like text based on patterns.

How they work:

They analyse statistical patterns to predict the next word or phrase, crafting responses that sound coherent.

They don’t “know” facts or fact-check, and by default they have no access to real-time data (even internet-connected models vary in accuracy).

Think of an LLM as a well-read parrot: it mimics tone and content brilliantly but doesn’t understand what it’s saying. LLMs are tools, not sources, generating plausible text, not verified facts.

They’re not connecting you to a database of verified facts—they’re just completing the sentence in the most plausible way, based on past patterns.
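To make the autocomplete idea concrete, here is a toy sketch in Python. It is not how ChatGPT or Grok are actually built (real models use neural networks trained on vastly more text); it is a simple word-pair model over a tiny corpus I made up for illustration. The names `corpus`, `build_bigrams`, and `generate` are my own.

```python
import random
from collections import defaultdict

# Tiny invented corpus; a real model trains on vastly more text.
corpus = (
    "the minister said the report was published in march . "
    "the minister said the study was published in june . "
    "the report was leaked in june ."
).split()

def build_bigrams(words):
    """Map each word to the list of words that followed it in the corpus."""
    following = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        following[current].append(nxt)
    return following

def generate(following, start, max_words=12, seed=0):
    """Build a sentence by repeatedly picking a word that has followed the last one."""
    rng = random.Random(seed)
    output = [start]
    while len(output) < max_words and output[-1] in following:
        output.append(rng.choice(following[output[-1]]))
        if output[-1] == ".":
            break  # stop at the end of a sentence
    return " ".join(output)

bigrams = build_bigrams(corpus)
print(generate(bigrams, "the"))
```

Try different `seed` values. Every word pair in the output appeared somewhere in the corpus, so the sentence reads smoothly, yet the model can stitch together a sentence such as “the study was leaked in march .” that no source ever stated. Nothing in the code checks whether a sentence is true. It only checks what tends to come next.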

The Sky News example: AI makes up a transcript of a podcast episode

Take the recent case from Sky News. Political editor Sam Coates uses ChatGPT to generate ideas and improvement tips for his podcasts, based on transcripts of previous episodes. One day, before that day’s transcript had even been uploaded, he asked ChatGPT which transcripts it had access to.

ChatGPT claimed it already had that day’s transcript. It even produced the full text of an episode that hadn’t been transcribed yet—because it didn’t exist.

When Coates pressed the AI, asking if it had made the transcript up, it doubled down. “None of this is fabricated,” it insisted. “I didn’t alter anything.” Only after repeated questioning did it finally admit the transcript was made up.

This is what we call a hallucination—an AI-generated fabrication that sounds entirely plausible but has no basis in reality.

Why LLMs sound confident (even when wrong)

The problem is that hallucinations don’t come with warning labels. ChatGPT will rarely say, “I’m not sure,” or “This could be made up.” Instead, it will tell you something that sounds like a fact, complete with fake citations or fabricated details, because its job is to generate believable text, not truthful text.

LLMs are optimised for fluency, not factual accuracy.

They are trained to sound helpful and confident—even when they’re completely wrong.

This can be dangerously misleading. On Twitter, for instance, well-meaning users might repeat a false claim generated by an AI tool like Grok—thinking it’s a quote, a fact, or a verified statement. Trolls and propagandists exploit this to spread misinformation with the polish of authority.

Why LLMs double down when challenged

Correct an LLM’s mistake, and it often doubles down—not out of stubbornness, but because of its statistical logic. When you say, “That’s not right,” the model predicts the most likely response based on its training patterns, which often means defending its initial output to stay coherent.

Examples:

If an LLM fabricates a study and you challenge it, it might claim, “It was published in an obscure journal in 2023,” weaving a more elaborate lie.

Or, if it invents a celebrity quote from a 2024 interview, and you point out it’s fake, it might insist, “It was in an online exclusive with [made-up outlet],” doubling down with new details.

This isn’t malice; it’s a design flaw. LLMs lack self-awareness and built-in fact-checking mechanisms, so they often fail to admit errors and instead dig deeper into their mistakes.

The bias and danger in training data

LLMs are trained on data scraped from all over the internet. That includes academic papers and news articles—but also blogs, forums, propaganda, and conspiracy theories. They don’t distinguish between high-quality sources and low-quality junk.

If their training data includes disinformation, they may echo it—especially if it’s phrased in a persuasive way. For example:

A question about vaccines could return misleading claims from fringe websites.

A prompt about geopolitics might parrot biased language from state-controlled outlets like RT or Sputnik. LLM grooming is real, and I wrote about it last month.

LLMs aren’t designed to weigh sources—they just average across them. That’s risky.

The hallucination problem

“Hallucinations” are the AI equivalent of making stuff up. They occur when the model fills in gaps with text that sounds right but isn’t real.

Examples include describing a fictional court case with believable-sounding details, or quoting someone as saying things they never said.

Hallucinations are especially dangerous when they come from tools like Grok, which are integrated into social media platforms and can produce “answers” on the fly—no citations, no disclaimers.

Why LLMs are not primary sources

Let’s be clear: primary sources are firsthand, original documents or accounts—such as official transcripts, eyewitness statements, court rulings, or verified news reports.

LLMs:

Often don’t cite where their information came from, and when they do, the citations can be fabricated.

Can’t be held accountable for accuracy.

Synthesise content from multiple sources, including unreliable ones.

Using an LLM as a source is like quoting a confident stranger who’s skimmed Wikipedia and half the internet—helpful for inspiration, but not someone you’d bet your reputation on.

How to fact-check LLM outputs

Don’t trust an LLM’s word—verify it with primary sources. These are original, authoritative documents or firsthand accounts, like official reports or eyewitness statements. Use this checklist:

Can I confirm this with a reputable, independent primary source, like an official document or eyewitness account?

Is there a link to an original document, such as a government report or court ruling?

Do details seem vague, overly polished, or too good to be true?

Are trusted journalists, experts, or outlets reporting the same information?

Tools and strategies

Primary source repositories: Check your government’s official website for laws, WHO for health data, or court websites for legal rulings.

Academic research: Use Google Scholar or JSTOR to find peer-reviewed studies.

News outlets: Rely on credible sources like the BBC, Reuters, or AP.

Visual claims: Use reverse image search (such as Google Images) to trace the origin of photos or videos.

Cross-check: Compare multiple independent sources to confirm consistency.

Example: If an LLM claims a new policy was passed, visit the government website for the official bill or check the relevant government department’s press releases. If it’s about a scientific breakthrough, search Nature or Science journals, not just news summaries. Document your findings to share with others, boosting your credibility and helping the community stay informed.

Supporting well-meaning users

Unless you are dealing with nasty trolls, most people sharing LLM-generated claims aren’t trying to mislead—they’re just swayed by the AI’s confident tone. As fact-checkers, we can guide them without judgement:

Simplify LLM mechanics: Explain that LLMs are like a “supercharged autocomplete” or a “smart but unverified Google search,” generating text from patterns, not facts.

Encourage verification: Urge users to check primary sources before sharing AI claims. For example, if someone tweets an LLM’s take on a new law, reply, “Interesting! Can you share the official source? I found the bill here: [link to primary source].”

Share resources: Point to reliable tools like Google Scholar, Snopes, or FactCheck.org, and primary sources like government websites or peer-reviewed journals.

Promote Community Notes: Encourage joining Twitter’s Community Notes to flag misinformation, including LLM errors, and amplify verified facts.

Stay constructive: Avoid shaming. A gentle nudge, like, “That claim sounds intriguing, but let’s verify with a primary source,” keeps the conversation productive.

Empowering users to verify strengthens our online communities and curbs the spread of misinformation.

Dealing with trolls

Trolls adore LLMs because they can churn out polished, plausible propaganda at scale, flooding platforms like Twitter.

Spot the red flags

No sources or citations.

Overly vague, dramatic, or journalistic-style text without attribution.

Similar phrasing across multiple accounts, suggesting coordinated LLM-generated posts.
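For the last red flag, a rough way to check for similar phrasing is to compare posts pair by pair. Below is a minimal Python sketch using `difflib.SequenceMatcher` from the standard library. The account names, the posts, and the 0.8 threshold are all invented for illustration, and a high score only means two posts deserve a closer look. It does not prove that they were written by an LLM or that the accounts are coordinated.

```python
from difflib import SequenceMatcher
from itertools import combinations

def normalise(text):
    """Lowercase and collapse whitespace so trivial edits don't hide a match."""
    return " ".join(text.lower().split())

def similar_pairs(posts, threshold=0.8):
    """Return (account_a, account_b, ratio) for pairs of near-identical posts.

    `posts` maps an account name to the text it posted. The default
    threshold of 0.8 is an arbitrary starting point, not a calibrated value.
    """
    pairs = []
    for (acc_a, text_a), (acc_b, text_b) in combinations(posts.items(), 2):
        ratio = SequenceMatcher(None, normalise(text_a), normalise(text_b)).ratio()
        if ratio >= threshold:
            pairs.append((acc_a, acc_b, round(ratio, 2)))
    return pairs

# Hypothetical accounts and posts, made up for this example.
posts = {
    "@account_one": "Shocking new report reveals the truth they tried to hide from you!",
    "@account_two": "Shocking new report reveals the truth they tried to hide from us!",
    "@account_three": "Lovely weather in Leeds today, off for a walk.",
}

for acc_a, acc_b, ratio in similar_pairs(posts):
    print(f"{acc_a} and {acc_b} look alike (similarity {ratio})")
```

Character-level matching like this catches copy-and-paste with light edits. It will miss posts that have been reworded more heavily, so treat it as a first pass before checking account history and sources by hand.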

Respond strategically

Ask for evidence: “Where’s the primary source for that claim?”

Share verified information: “Here’s what the official report says: [link to official source].”

Stay calm and factual—trolls thrive on emotional reactions.

Flag or report misleading posts and contribute a Community Note to counter them.

Amplify Community Notes by sharing them to expose troll tactics quickly.

Call out LLM grooming: Highlight when posts resemble state-backed propaganda, e.g., “This dramatic claim looks like Russian LLM-generated disinfo. Here’s the truth: [primary source].”

By staying evidence-based, you neutralise trolls and limit their spread.

Actionable steps

Ready to tackle AI-generated misinformation? Here’s how:

Verify before sharing: Always cross-check LLM claims with primary sources, like official websites (e.g., gov.uk), academic journals (Nature, The Lancet), or trusted outlets (BBC, Reuters).

Educate your network: Share Twitter threads explaining LLM limits, using analogies like “AI is a clever parrot, not a journalist who has cross-checked sources.”

Create visual content: Make infographics or short videos debunking LLM myths (e.g., hallucinations) to share on Twitter. Tools like Canva can help.

Join Community Notes: Use your fact-checking skills to write or upvote Twitter Community Notes, especially for LLM-generated errors.

Follow credible sources: Stay informed with OSINT accounts like @bellingcat or @GeoConfirmed and fact-checking sites like Snopes.

Collaborate and mentor: Connect with fact-checkers, journalists, or groups like NAFO to share resources. Mentor new fact-checkers by sharing your verification process.

Highlight LLM grooming: When you spot propaganda, tweet, “This polished claim looks like LLM output—likely Russian grooming. Here’s the truth: [primary source].”

Promote digital literacy: Support initiatives teaching AI literacy to reduce misuse. Share guides or host discussions on how to spot LLM pitfalls.

Every step you take strengthens the fight against misinformation and disinformation.

LLMs in OSINT: the Bellingcat lesson

Experts like Bellingcat, who tested LLMs for geolocation tasks, warn against relying on them as primary sources. LLMs can brainstorm ideas—like suggesting a photo’s setting resembles a particular country’s climate—but they often hallucinate or make overconfident guesses.

For example, an LLM might misidentify a building’s location, leading to serious errors if shared unverified. Bellingcat’s takeaway? LLMs are tools to spark ideas in OSINT workflows, but primary sources, like verified imagery or official records, are essential for accuracy. Always verify AI outputs to avoid spreading misinformation.

So, what is the best way to use AI tools responsibly?

I’m a big fan of generative AI tools and use them extensively every day in my professional and personal life. However, I have the skills to use them safely. If you’ve read everything in this post and feel sceptical about using these tools, don’t worry. You can still use them responsibly:

Use them as starting points or assistants, not final authorities.

Always verify important facts against trustworthy primary or secondary sources.

Be aware of the potential for hallucinations or biases.

Treat AI outputs as drafts or brainstorming tools, not gospel.

Final thoughts

We’re navigating a new information landscape where AI tools like ChatGPT and Grok are powerful but flawed. They’re fallible, manipulable, and sometimes dangerous if misused.

They’re not journalists, experts, or primary sources—they’re tools.

Outsmart the AI hype. Be sceptical, verify with primary sources, and amplify truth over noise. Verify before you amplify!

Thank you for getting to the end of this long post. Let me know what you think in the comments. Which AI tools do you like using? Have you encountered hallucinations?